Human Insights: 9 of the Brightest Minds in AI on Where We Are and Where We Will Be

The impact and level of disruption will be staggering, but the pace of change will be less swift than you'd expect.

BY JENNIFER CONRAD, BEN SHERRY

It's not hyperbolic to say that artificial intelligence has changed the game. In the aftermath of the explosive release of ChatGPT in November 2022, people struggled to grasp the implications of being able to casually communicate with seemingly omnipotent technology, and they had a lot of fun playing with it. Simple prompts given to OpenAI's large language model furnished best-man speeches, haikus, and business plans. Then things got serious. In January 2023, researchers reported that ChatGPT performed at or near the passing threshold on the U.S. Medical Licensing Exam. In June, a New York lawyer was sanctioned for filing a brief that cited court cases ChatGPT had made up. Google, suddenly back on its heels, scrambled to build a competitive product, while Amazon, not to be outdone, launched its own tool in short order.

All the while, venture capitalists were stress-testing their portfolio companies to determine how they'd hold up to AI disruption. Some VCs have even put the screws to their portfolio companies' vendors. "You have to be really thoughtful about how this is going to impact not just your own business, but the people you're relying on, your dependencies," Kaitlyn Holloway, founding partner at Alexis Ohanian's venture capital firm 776, told Inc. last April. Around that time, Holloway found herself asking the firm's portfolio companies about their packing-peanut suppliers: Are they "using AI to be better, faster, stronger? Are they going to fall out of the game because they aren't keeping up with the latest technology?" These are provocative questions, and ones you should be asking too, along with: How can AI be helpful? And what could go wrong? Here, some of the brightest business leaders and most-admired technology and design experts from across the spectrum weigh in on the answers.

Deon Nicholas

Co-founder and CEO of Forethought, an AI-powered customer-support service provider based in San Francisco

"Going forward, you're going to see a big distinction between AI chatbots and AI agents. The way a chatbot works is that you ask it questions and it gives you its best attempt at an answer. Agents, on the other hand, can go further and take action on your behalf. There's a technique called chain of thought that essentially allows the AI to repeatedly query itself to get to a decision, so if you were developing a customer service agent, for example, you could describe a problem, give it a bunch of options for potential actions to take, and then watch as it reasons out which action to take and why."

Ben Schreiner

Head of business innovation and go-to-market for U.S. commercial sales at Amazon Web Services

"A lot of businesses are just taking the technology and then looking for a problem to solve--like a hammer looking for a nail. I've been telling customers to not focus on the technology, but to focus on the problem and then work backward. Ask yourself, 'What do I spend the most money on? What do I spend the most time on? What do my customers complain about most often?' If you can move the needle on one or more of those elements with automation, you can change the trajectory of your company."

Renée Richardson Gosline

Head of the Human-First AI group at MIT's Initiative on the Digital Economy

"Generative AI creates the most probable answers as found on the internet. That means two things: One, if you're talking about strategy, it's actually probably not the place you want to go. Sometimes, I use generative AI to see what not to do to be innovative. But it will help bring you up to speed on the prevailing approaches in a category--novices can learn quickly using generative AI. The other thing is that because it's just using statistics to say this is what is most common, it can be trained with biased or harmful content. And there's a feedback loop--the more content that's generated by generative AI, the more generative AI is trained on content generated by generative AI. It becomes this thing where GAI is quoting GAI."

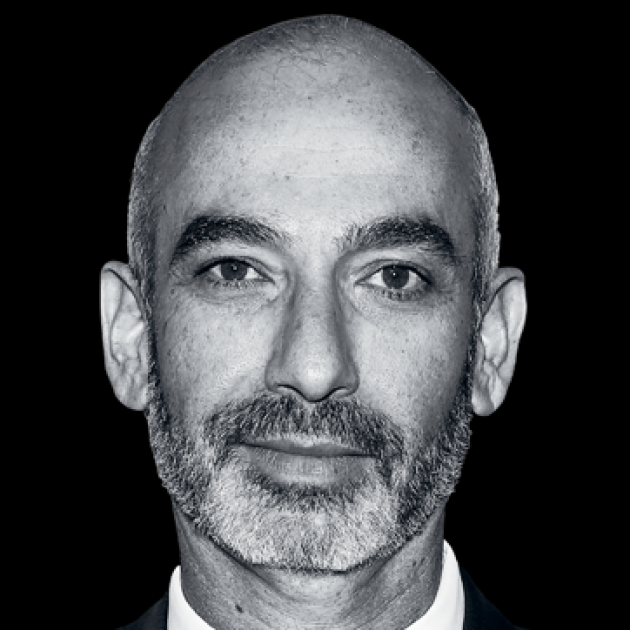

Gadi Amit

President and founder of San Francisco's strategic technology design studio NewDealDesign

"I don't think the fear and discomfort of humans using AI, with all its peculiarities and deficiencies today, is being addressed. People are driving Teslas and, every once in a blue moon, you need to grab the steering wheel. It's been talked about in kind of a nonchalant way, like it's not a big deal. It's a really big deal. The first thing is just to make sure we understand that those deficiencies are a real technical problem and also a very deep trust issue for humans. I think a lot more people from the humanities and arts should be dealing with this issue, and fewer people with computational degrees. I honestly am concerned about that."

Peggy Tsai

Chief data officer at BigID, a data intelligence platform based in New York City

"After initially building these large language models, companies didn't see results in ROI in terms of accuracy, efficiency, and overall impact. So there's been a shift to building small language models. These are very domain-specific, or for a specific purpose, such as HR. Companies can cut costs in terms of the computing power because of the size of the model that's needed. And they can assure higher data quality, because they're feeding only very specific data sources and information into the language models. What we're looking for now is for AI not to be a shiny-new-toy technology. It's going to be embedded into everyone's responsibilities."

Meredith Whittaker

President of the private-messaging app Signal and co-founder of the AI Now Institute at NYU

"There's a lot of pressure coming from the scant handful of actors who can produce and deploy these systems at scale to license their technology, sign multiyear cloud contracts, and otherwise make use of AI, which translates to profits and market share for Google, Microsoft, Amazon, and the other AI giants. Whether it's actually useful for businesses is very unclear."

Kevin Guo

Co-founder and CEO of Hive, a San Francisco-based deep-learning startup known for its AI-powered image-recognition system

"If you're a business owner, you should definitely get familiar with AI, but I don't think it's going to be as fast a transition for all industries as the internet was. This technology is just much slower to develop. Now, there are some industries, like customer support, that are already being radically changed by generative AI, and over the long term, I think you'll start to see stuff like fully AI-driven medicine and diagnoses. The impact of the change will be as large as the internet, if not greater, but it's going to take decades."

Bob Muglia

Author of The Datapreneurs, ex-Microsoft exec, and former CEO of Snowflake

"Most companies are going to adopt AI technologies in the products they buy. Nobody should have to build their own product support chatbot. That should be a feature of Zendesk or Salesforce. It's relatively straightforward to leverage product support conversations you've had with your customers in a knowledge base and to be able to answer questions for end users. I think you could eliminate a lot of first-level product support through that."

Kylan Gibbs

Chief product officer and co-founder of Inworld AI, a Mountain View, California-based startup creating tools to build non-player characters (NPCs) powered by generative AI

"AI is going to make conceptual iteration so much faster. In game design, a writer might have to go through something like 70 people before they ever get a sense of how their idea actually feels to play, but by uploading some concept art and a description of the scenario, you can get a low-level interactive experience pretty quickly. Getting that feedback earlier can save teams a lot of time."

Photo Credit: Inc Art.